In 2010, if you had asked anyone in the digital product innovation space to define ‘user interface’, you would have received a standard, commonly accepted definition: ‘a physical manner through which a person can interact with a digital or mechanical system, typically through use of a screen, keyboard, mouse, controller, or finger (touch).’

Fast forward to today, 2016, and the lines are already blurred. Apple, Google, Microsoft, and recent frontrunner Amazon (see Stuzo’s article on Alexa and Amazon Echo) have all made advancements in voice interface, a new type of user interface that relies solely on a user’s spoken command to interact with a digital product experience. Facebook, Microsoft, and Amazon have all announced new ‘bot’ products, which allow businesses to integrate voice and text message interface features with third-party applications. Consider now the progress these companies have made with these technologies in just 5 short years, the speed at which AI (artificial intelligence) is advancing, and Moore’s law. I would not be surprised to find that the next 5-10 years of voice interface and AI innovation (which some have popularized as Zero UI, as noted in FastCo) exceed current projections.

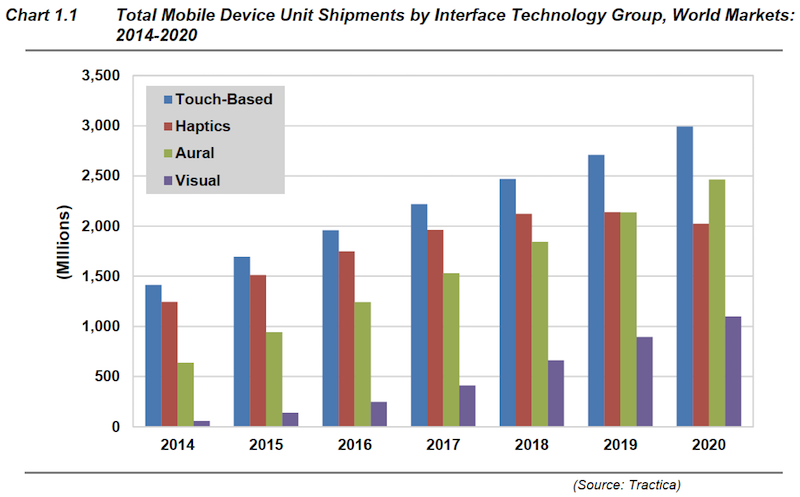

For reference, an in-depth report released by Tractica in Q2 2015, titled Emerging Interface Technologies for Mobile Devices, projects that “Visual Interfaces” (gesture recognition and eye tracking) will reach over 1 billion units shipped by the year 2020 (see chart).

Let’s look out to the year 2025 and think about the implications these technical innovations will have on our businesses, on the market, and on our daily lives.

First, for those of us who work in digital product innovation, how will this rapid innovation transform our business?

Today, when we approach user experience and user interface design, we typically think about the user’s first and primary point of interface being a screen (whether a smartphone, tablet, laptop, kiosk, or now even a virtual or augmented reality screen). However, by 2025, these screens will no longer take first place as the primary point of interface between a person and a digital product.

In the future, designing a user experience will require consideration for a variety of user interfaces all working together to produce the desired outcome. A UX designer will no longer be beholden to wireframes and clickable rapid prototypes. UX designers will have to consider many inputs including:

- Screens

- Voice inputs

- Combination of voice input and eye tracking (think: augmented reality + voice interface)

- Sensory inputs, such as a person’s current health status (think: heart rate)

- The amount of light or heat in a room

- A user’s acceleration, or any number of other sensory feeds, and much more.

UX designers will need to plan out a series of events, inputs, and outputs that may all happen without any type of screen or physical interaction, and find a way to illustrate these interactions in a simple and intuitive manner to business stakeholders, product engineers, and investors. This introduces a heightened level of UX planning complexity.
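To make the idea of planning events, inputs, and outputs a little more concrete, here is a minimal Python sketch of how a screenless interaction might be written down as rules that map sensory inputs to system outputs. Everything in it is an assumption for illustration: the names `Reading`, `Rule`, and `plan_outputs`, the sensor keys, and the thresholds are all hypothetical and do not come from any real product or library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical types for illustration only; not taken from any real system.
Reading = Dict[str, float]  # e.g. {"heart_rate": 72.0, "room_light": 0.4}


@dataclass
class Rule:
    name: str
    condition: Callable[[Reading], bool]  # when should the system react?
    output: str                           # what the system does, with no screen involved


def plan_outputs(rules: List[Rule], reading: Reading) -> List[str]:
    """Return the output of every rule whose condition matches the reading."""
    return [rule.output for rule in rules if rule.condition(reading)]


# Thresholds below are invented placeholders, not recommendations.
rules = [
    Rule("elevated_heart_rate", lambda r: r.get("heart_rate", 0.0) > 120, "lower AR intensity"),
    Rule("dark_room", lambda r: r.get("room_light", 1.0) < 0.2, "turn on lights"),
]

if __name__ == "__main__":
    print(plan_outputs(rules, {"heart_rate": 135.0, "room_light": 0.6}))
```

A table of rules like this is one possible way to show stakeholders which inputs trigger which outcomes. A real system would likely need far richer context than simple thresholds.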

Andy Goodman, Group Director of Design Strategy at Accenture Digital, said:

“If you look at the history of computing, starting with the Jacquard loom in 1801, humans have always had to interact with machines in a really abstract, complex way.”

The interesting paradigm is that in the future, complexity will be removed from the interface and handled by the systems. Companies in the business of digital product innovation will be forced to adapt and evolve to the ever-changing world of the user interface and write new definitions for what it means to provide an intuitive user experience.

Next, let’s think about implications to the global market.

Just about every industry in the world can benefit from advancements in digital product user interface. Consider a couple of hypothetical experiences:

In Healthcare, imagine a surgeon is in the operating room during a stressful and fast-paced procedure. The surgeon calls out to the medical staff to prepare for a mission-critical step in the procedure. However, it’s not just the human medical staff that’s listening in. The voice-activated AI medical assistant is also aware of the upcoming step in the procedure and hears the surgeon’s call. As the nurses gather together the necessary instruments, the AI medical assistant changes the lighting in the room, changes the camera angles for the video-assisted devices, switches all the data visualization on the monitors to the most important vital signs, and starts providing the surgeon with a new set of vitals directly into the surgeon’s earpiece, by voice. Humans and machines, working together to ensure the optimal outcome for the patient. Imagine yourself as the user experience designer responsible for architecting the user interfaces for this complex system.

In Manufacturing, consider the job of a foreman walking his factory floor. This foreman of the future will primarily manage machines and systems, not people. His job will be to ensure the computer-assisted assembly line and all associated systems are running at optimal efficiency. Will he be walking the factory floor with an iPad? Perhaps. But that’s not how he’ll see his world. As he walks the floor with a specially designed pair of augmented reality (AR) glasses, focuses his eyes on a particular machine, and squints ever-so-slightly to “zoom in”, his AR glasses start feeding him output metrics from the machine, visually, as an overlay directly on top of the machine he’s looking at. He raises his hand and points his finger at a component of the machine highlighted in yellow. The component is operating at lower energy efficiency compared to last week. He asks, “When did this component start using more energy?” The earpiece of his AR glasses responds, “Last night, at precisely 10:15pm, this component started utilizing 10% more energy compared to the night before.” The AR system then sends a live video feed of the inner workings of the component to the foreman’s iPad, allowing him to tap on the component and open up a troubleshooting interface. Imagine yourself as the user experience designer responsible for making it easy for the foreman to find, diagnose, and solve a complex problem on the factory floor.

Finally, how will these innovations change our daily lives?

Consider the millennial mom of the future, now 35 years old, working as an executive in a fast-growing company and trying to manage her life with two young children. Let’s call her Diana. It’s a Tuesday morning, 7:00am in Hoboken, NJ. The kids need to be fed and sent out the door for school, and Diana has an important client call with London starting at 7:30am. Thankfully, Diana has Ava, the hypothetical home automation assistant of the future, working alongside her to ensure everything runs smoothly. Think of Ava as a central “brain” of the house. Ava receives live feeds from sensors across the house. Ava knows the kids are on their way downstairs to the kitchen and turns on the toaster to prepare their favorite bagel. Ava knows school starts at 7:45am and sends a command to the family’s car, which turns on, exits the garage, and pulls out front at precisely 7:25am, when the kids run out the door and jump in. Ava sends a live video feed from inside the car as it drives itself and the kids to school. On a live video feed between Diana’s tablet computer and the family’s autonomous car, just before Diana’s 7:30am call, she says goodbye, blows a kiss to her children, and wonders how she would ever keep her life in order without Ava.

But Ava’s job isn’t done yet. Ava has access to Diana’s calendar and knows that Diana will not be driving to corporate headquarters this particular Tuesday morning. As such, Ava changes Diana’s autonomous car’s programming, enabling Uber driving mode between 8am and 10am rush hour. Ava works with Uber to drive the car around town helping other people with their morning commutes and earning the family some spare cash.

Imagine yourself on the user experience team and engineering team responsible for making Ava simple and intuitive for Diana’s family to program. Diana’s family uses Ava to automate their daily lives and interface with all the other digital products and services their family uses at work, at school, and at home. Your job on this digital product innovation team is not to make a screen look pretty. It is to understand the complexity of human life, understand the technological capabilities of Ava, and make Ava accessible to the masses.

The Future of Digital Product User Interface

Now, having pontificated on interfaces of the future and how they affect our businesses, our industries, and our daily lives, how do we define the future user interface?

A user interface is the voice command a person gives to a microphone that’s connected to any one of many connected devices in their world (connected devices also referred to as Internet of Things, or IoT).

A user interface is the action taken by an augmented reality experience when a user’s heart rate increases rapidly.

A user interface is the act of someone feverishly waving goodbye as their significant other departs on a train. A smartwatch senses the user waving and listens to what the user is saying. Through automated dictation, the smartwatch sends a message to the departing partner’s screen, “I love you and will miss you.”

A user interface is a nano-bot circulating through a user’s bloodstream, continually monitoring blood-glucose level and sending data to the user’s secure, private health cloud, which then sends health data directly to the user’s phone screen as an alert when the user’s blood-glucose level is out of acceptable tolerance.

The digital product user interface of 2025 connects everything in our world to us, and us to everything else in our world. It turns anything we can measure or interpret into data and works to turn that data into outputs that automate and improve our lives. The user interface of 2025 is therefore a productivity interface, as the sole intent of interfaces and the discrete components of their systems is to produce better outcomes for users.

The user experience designer of today evolves into the productivity designer of tomorrow, as their job is to take complex real-world experiences and make them work for the user, not against them. The user interface designer of today evolves into the interpretation designer or translation designer of tomorrow, as their job is to take a huge array of sensory inputs and translate them into digital actions that automate activities for the user.

I am excited to see and participate in Stuzo’s embrace of these bleeding-edge technologies today and the company’s preparation for the productivity interface of tomorrow. This is what it means to work for a true Digital Product Innovation company.

———

Join Stuzo and other forward-leaning companies in paving the way to a new interface paradigm. Contact us and tell us about your digital product innovation challenge.